At Callstack, we believe that using AI, whether on-device or in the cloud, should be simple and consistent for React and React Native developers. Guided by this principle of a unified API, we are expanding our on-device AI SDK provider to include support for Embeddings.

This new feature, powered by Apple's built-in Natural Language framework, unlocks crucial capabilities like semantic search and content classification without requiring you to bundle a model with your app. Here's how to get started.

```typescript
import { embed } from 'ai'
import { apple } from '@react-native-ai/apple'

const { embedding } = await embed({
  model: apple.textEmbeddingModel(),
  value: 'React Universe Conf is next month, go get your ticket now!',
})
```

Read on to learn more about the model's architecture and the advantages of this on-device approach.

What are embeddings?

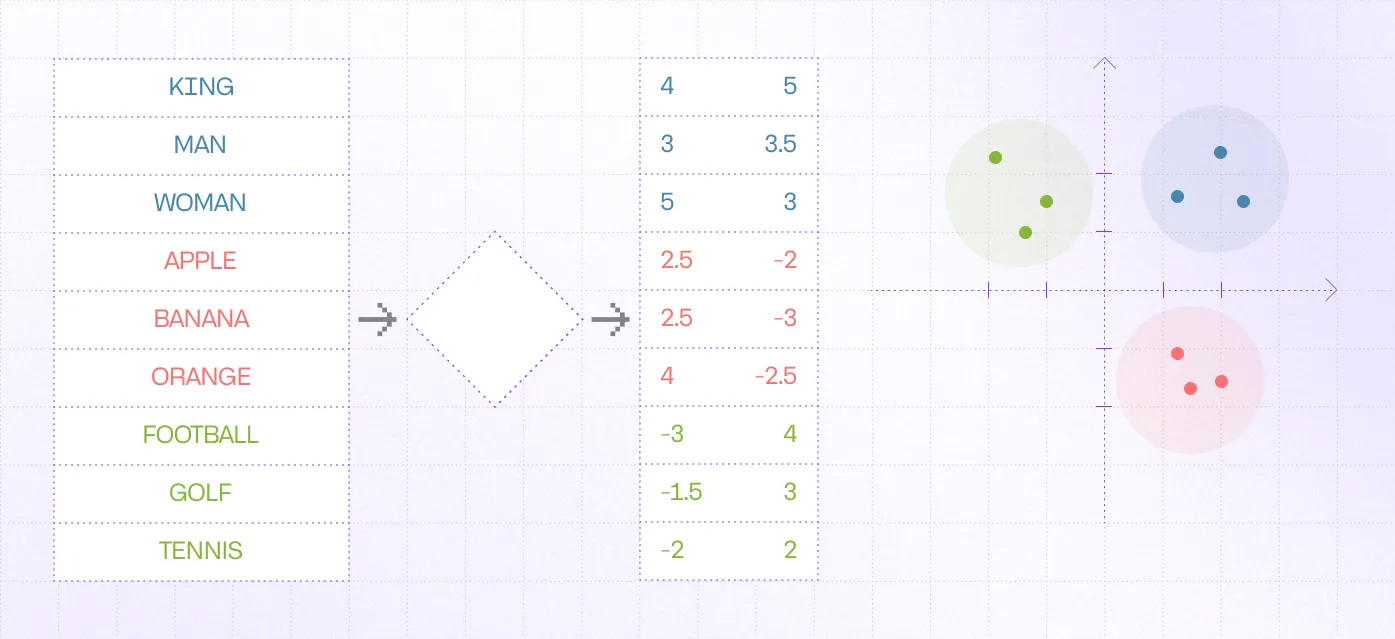

In the context of Natural Language Processing (NLP), an embedding is a numerical representation of a piece of text, be it a single word, a sentence, or an entire document. This representation takes the form of a vector, a list of numbers, that captures the text's semantic meaning.

Think of it as a coordinate system for language. Words and sentences with similar meanings will have vectors that are "close" to each other in this high-dimensional space. This concept unlocks a range of applications, such as:

- Semantic Search: Finding documents that are conceptually similar to a query, not just those that share keywords.

- Text Classification: Categorizing articles, emails, or user feedback based on their content.

- Clustering: Grouping similar pieces of text together without prior labels.

- Recommendation Engines: Suggesting items based on textual descriptions.
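To make "closeness" concrete, here is a minimal sketch of text classification by nearest neighbor. It assumes the embeddings have already been computed. The three-dimensional vectors, the labels, and the helper names (`euclideanDistance`, `classify`) are made up for illustration; real embeddings have hundreds of dimensions.

```typescript
// Hypothetical labeled examples with toy 3-dimensional embeddings.
type LabeledVector = { label: string; vector: number[] }

// Straight-line (Euclidean) distance between two vectors of equal length.
function euclideanDistance(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error('Vectors must have the same length')
  }
  let sum = 0
  for (let i = 0; i < a.length; i++) {
    const diff = a[i] - b[i]
    sum += diff * diff
  }
  return Math.sqrt(sum)
}

// Return the label of the example closest to the query vector.
function classify(query: number[], examples: LabeledVector[]): string {
  if (examples.length === 0) {
    throw new Error('At least one labeled example is required')
  }
  let best = examples[0]
  let bestDistance = euclideanDistance(query, best.vector)
  for (const example of examples.slice(1)) {
    const distance = euclideanDistance(query, example.vector)
    if (distance < bestDistance) {
      best = example
      bestDistance = distance
    }
  }
  return best.label
}

const examples: LabeledVector[] = [
  { label: 'billing', vector: [0.9, 0.1, 0.0] },
  { label: 'bug report', vector: [0.1, 0.8, 0.2] },
  { label: 'praise', vector: [0.0, 0.2, 0.9] },
]

// The query vector sits nearest to the 'bug report' example.
const predictedLabel = classify([0.2, 0.7, 0.1], examples)
console.log(predictedLabel) // bug report
```

In a real app, the example vectors would be computed once from representative texts and stored, so that only the incoming text needs to be embedded at classification time.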

On-Device vs On-Demand

The rise of powerful AI models has led to a common challenge: model distribution. Most third-party models require developers to bundle a large model file (often several hundred megabytes) within their app or force the user to download it upon first launch. While feasible for specific use cases, this approach is unsustainable for broader market adoption, and in some cases it can keep an app from growing quickly in popularity. If every app that wants to use AI downloads its own separate model, users' devices will quickly fill up.

Apple takes a more integrated and efficient approach. Many of its advanced AI models, including the one we're discussing today, are treated as shared assets.

When an application requests a model for the first time, the operating system downloads it to a shared asset catalog. This model is then available not only to the requesting app but to any other app on the system that needs it. If the model is already present, it's available instantly.

This system-level management means:

- Reduced App Size: Your app bundle doesn't need to include the model.

- Efficient Storage: A single model download serves all apps.

- Instant Access: If another app has already downloaded the model, yours can use it immediately.

It's the same foundation behind many other on-device models from Apple, which we will be exploring in the coming weeks.

Overview of Apple's Embeddings API

For years, developers had access to NLEmbedding within the Natural Language framework. This API provided word embeddings, generating a vector for each individual word.

While useful, NLEmbedding had a fundamental limitation: it lacked contextual understanding. The vector for the word "bank" would be the same in "river bank" and "investment bank." True semantic understanding requires comprehending the entire sentence.

This is where the new API comes in. NLContextualEmbedding is a modern, transformer-based model. Instead of looking at words in isolation, it analyzes the entire string, so the vectors it produces reflect the meaning of each word in its context. This provides a significant improvement in semantic representation.

Overall, NLContextualEmbedding is:

- Built on a BERT (Bidirectional Encoder Representations from Transformers) architecture, a model type that excels at understanding relationships between words in a sentence.

- Not a single model. There are three different underlying models based on the language being processed, ensuring high-quality results across multiple languages.

- Able to process up to 256 tokens per request, producing 512-dimensional vectors.

The vector's dimension is a rough indicator of how much semantic detail it can hold. A higher number allows the model to capture more subtle nuances in meaning. While many popular compact models designed for mobile use, like all-MiniLM-L6-v2, use a smaller dimension (e.g., 384), Apple's choice of 512 dimensions is a promising indicator of the model's capacity for high-fidelity language understanding. The 256-token sequence length is common among BERT-based models of this class, balancing performance with the ability to process meaningful chunks of text.

From token vectors to a single sentence embedding

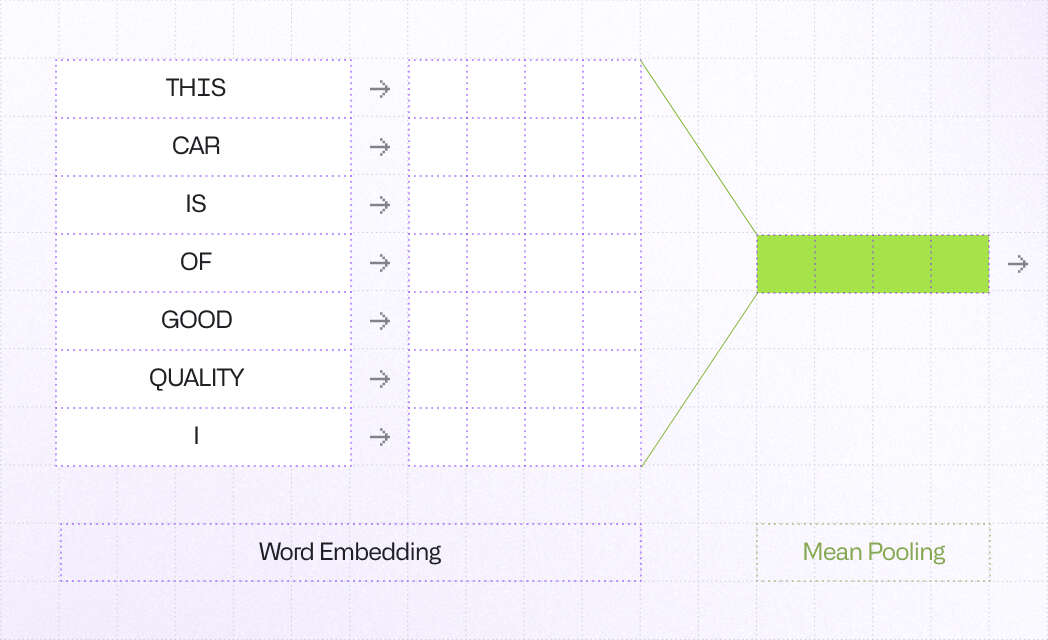

A critical detail of how NLContextualEmbedding works is that it doesn't return one vector for an entire sentence directly. Instead, it generates a distinct, context-aware vector for each token (a word or sub-word piece). This provides a rich, granular output, but many applications and APIs, including the Vercel AI SDK's embed function, require a single vector representing the entire sentence.

To bridge this gap, we aggregate these token vectors into a single sentence embedding using mean pooling, a technique that calculates the element-wise average of all vectors.
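The pooling step can be illustrated in TypeScript. This is only a sketch of the arithmetic: the library performs it in native code, and the `meanPool` name and the toy token vectors below are assumptions made for illustration.

```typescript
// Average a list of token vectors element by element into one vector.
function meanPool(tokenVectors: number[][]): number[] {
  if (tokenVectors.length === 0) {
    throw new Error('Expected at least one token vector')
  }
  const dimension = tokenVectors[0].length
  const sums = new Array<number>(dimension).fill(0)
  for (const vector of tokenVectors) {
    if (vector.length !== dimension) {
      throw new Error('All token vectors must have the same dimension')
    }
    for (let i = 0; i < dimension; i++) {
      sums[i] += vector[i]
    }
  }
  // Divide each accumulated sum by the number of tokens.
  return sums.map((sum) => sum / tokenVectors.length)
}

// Three toy token vectors with 4 dimensions each (real ones have 512).
const sentenceEmbedding = meanPool([
  [1, 0, 2, 4],
  [3, 2, 2, 0],
  [2, 4, 2, 2],
])
console.log(sentenceEmbedding) // [2, 2, 2, 2]
```

Whatever the number of tokens, the output keeps the dimension of a single token vector, which is what lets sentences of different lengths be compared directly.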

To keep this operation efficient, we use Apple's low-level Accelerate framework, specifically the vDSP library. This lets us hand the averaging off to highly optimized, vectorized code that takes advantage of the CPU's vector-processing capabilities, computing the final sentence embedding with negligible overhead.

First-class Vercel AI SDK support

We've made integrating these capabilities as straightforward as possible. With the react-native-ai library, you can generate embeddings using either embed (for a single string) or embedMany (for several strings) from the AI SDK.

```typescript
import { embedMany } from 'ai'
import { apple } from '@react-native-ai/apple'

const { embeddings } = await embedMany({
  model: apple.textEmbeddingModel(),
  values: ['React Universe Conf', '3-4 September 2025', 'Wroclaw'],
})
```

Each result is a high-dimensional vector that you can then use for a variety of on-device AI tasks, such as finding the similarity between two pieces of text using cosine similarity calculations.
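As a rough illustration of that last step, the sketch below computes cosine similarity and ranks a few texts against a query. The vectors are tiny, hand-written stand-ins for real embeddings, and the `cosineSimilarity` and `rankBySimilarity` helpers are written here for illustration rather than taken from the library.

```typescript
type EmbeddedText = { text: string; embedding: number[] }

// Cosine similarity: dot product divided by the product of vector lengths.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error('Vectors must have the same length')
  }
  let dot = 0
  let squaredNormA = 0
  let squaredNormB = 0
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i]
    squaredNormA += a[i] * a[i]
    squaredNormB += b[i] * b[i]
  }
  // Treat a zero vector as having no similarity to anything.
  if (squaredNormA === 0 || squaredNormB === 0) return 0
  return dot / (Math.sqrt(squaredNormA) * Math.sqrt(squaredNormB))
}

// Return a copy of the items sorted from most to least similar to the query.
function rankBySimilarity(query: number[], items: EmbeddedText[]): EmbeddedText[] {
  return [...items].sort(
    (x, y) =>
      cosineSimilarity(query, y.embedding) - cosineSimilarity(query, x.embedding)
  )
}

// Toy 3-dimensional vectors standing in for real embeddings.
const items: EmbeddedText[] = [
  { text: 'Conference tickets', embedding: [0.9, 0.1, 0.1] },
  { text: 'Pasta recipe', embedding: [0.1, 0.9, 0.2] },
  { text: 'Event schedule', embedding: [0.7, 0.2, 0.3] },
]
const queryEmbedding = [1, 0, 0]

const ranked = rankBySimilarity(queryEmbedding, items)
console.log(ranked.map((item) => item.text))
// ['Conference tickets', 'Event schedule', 'Pasta recipe']
```

In an app, the item vectors would come from `embedMany` and the query vector from `embed`, both using the same model so the vectors live in the same space.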

Conclusion

Our goal is to bring the power of native, on-device frameworks from Apple (and soon, Android) to help you build intelligent and personalized apps, without you having to deal with the complexities of each platform's specific APIs. This is where React Native truly shines, abstracting away platform differences under a unified interface, in this case, the Vercel AI SDK.

We encourage you to explore the documentation, experiment with the new embeddings API, and see what you can build. We look forward to seeing the innovative solutions that emerge from the community.
